Categorical systems theory

Categorical systems theory is the study of the design and analysis of systems using category theory. Category theory is a hopelessly abstract branch of pure mathematics, so abstract that even the other mathematicians refer to it as “abstract nonsense”. Why would anyone want to study such complex and concrete situations as general systems with a branch of mathematics so divorced from reality?

In this blog post, I hope to answer that question. I want to say what the point of doing systems theory categorically is; what the main take-away lesson is, beyond the technicalities. I also want to show that category theory isn’t divorced from reality at all; it’s just general purpose.

I don’t want to waste your time, so here is the main idea of categorical systems theory: when designing or analyzing systems, we should keep a clean separation between the internals of a system and its interface with its environment. Here are the basic principles:

- Generally, systems don’t exist in isolation. A system can always interact with its environment. However, when we model systems, we should be precise about exactly how a system can interact with its environment. We call this precise way that the system can interact with its environment the interface of the system.

- Systems should only interact with each other through their interfaces. Otherwise, it becomes very difficult to reason about how combined systems will behave.

- Therefore, from outside the system, all we need to know is what is going on at the interface. We can then give guarantees on system behavior that only mention the interfaces. So long as we know how the interfaces can interact, we can understand the possible ways that systems can interact.

Now, these ideas weren’t invented by category theorists. In fact, the idea that we should cleanly separate the things a system does inside from the way it interacts with its environment through an interface is common practice in computing (both in hardware and in software). This clean separation allows for modularity — systems can be designed component by component, and then the components can be put together to get more complex systems.

But note that I didn’t say what I meant by system here. By “system” I could have meant a circuit, whose interface consists of the ports that are left exposed to the environment. Or I could have meant a software library whose interface is an API. Or I could have meant a system of differential equations, whose interface consists of its free parameters together with some of its variables, which can be used to set the parameters of other systems down the line. Or I could have meant a Markov decision process, whose interface is the menu of actions an agent can take. Or I could have meant a Petri net, whose interface is a set of species which can be involved in interactions with other Petri nets down the line. Or I could have meant an epidemiological model, whose interface involves the flow of people between locations. Or I could have meant an ecosystem, whose interface involves the flow of animals and energy through its boundary. Or I could have meant…

Everyone has their own notion of “system”, depending on what they are studying or designing at the moment. We use a general mathematical framework like category theory because systems theory is general. The lessons of categorical systems theory apply to any sort of system, no matter what it is. And category theory tells us how to formulate properties of combined systems in terms of how they interact through their interfaces. This is because, at its heart, category theory is the algebra of composing things.

1 A bit of history: Ordinary categories

Category theory was not invented to put systems theory on solid mathematical grounds. It was invented in the early 1940s in order to make sense of calculations in homological algebra. Really, it was invented to describe when two constructions in homological algebra were “naturally equivalent”, and therefore could be replaced by each other in long calculations with many moving parts.

In order to organize these big calculations, Eilenberg and Mac Lane introduced the notion of a category. A category is an abstract algebra of functions, just like a vector space is an abstract algebra of vectors. The “vectors” in a vector space can be anything; what matters is that you can add them and scale them. Similarly, the “functions” in a category can be anything; what matters is that you can compose them. But while you can add any two vectors, you can’t compose just any two functions; you need the domain of the second to equal the codomain of the first.
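To make “an algebra of composable things” concrete, here is a minimal Python sketch of only the bookkeeping a category asks for. The names `Morphism`, `identity` and `compose` are hypothetical, chosen for this illustration. The arrows are deliberately not functions: each one is a path of labels that remembers its domain and codomain (the free category on a graph), and composition refuses to proceed unless the codomain of the first arrow is the domain of the second.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Morphism:
    """An abstract arrow from `dom` to `cod`.

    It is not a function in the calculus sense: it is a path of labels
    that remembers where it starts and where it ends.
    """
    dom: str
    cod: str
    steps: tuple = ()


def identity(obj: str) -> Morphism:
    """The identity arrow on `obj`: the empty path."""
    return Morphism(obj, obj)


def compose(g: Morphism, f: Morphism) -> Morphism:
    """g ∘ f (f first), defined only when cod(f) equals dom(g)."""
    if f.cod != g.dom:
        raise TypeError(f"cannot compose: codomain {f.cod!r} != domain {g.dom!r}")
    return Morphism(f.dom, g.cod, f.steps + g.steps)


f = Morphism("A", "B", ("f",))
g = Morphism("B", "C", ("g",))
h = Morphism("C", "D", ("h",))

print(compose(g, f))  # Morphism(dom='A', cod='C', steps=('f', 'g'))
# compose(f, g) raises TypeError: g ends at C, but f starts at A.
```

Associativity and the identity laws hold here because concatenating label tuples is associative and the empty path changes nothing.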

If you’ve taken a few calculus classes, you know that functions can often be expressed by formulas, like f(x) = \frac{1}{1 - x^2}. Functions can be composed by plugging in. If we have another function g(x)=x^3 then the composite function is (f \circ g)(x) = \frac{1}{1 - (x^3)^2} = \frac{1}{1 - x^6}. This may seem like all there is to composition, but there’s a hidden difficulty here. What if we have the constant function h(x)=1? Then the composite (f \circ h)(x) = \frac{1}{1 - 1^2} = \frac{1}{0} doesn’t make any sense! This is because 1 is not in the domain of f(x) — it doesn’t make sense to plug it in for x.
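The hidden difficulty is easy to reproduce with plain Python functions, which carry no declared domain. In this sketch, `compose` is a hypothetical helper that just plugs one function into another; nothing stops us from building f ∘ h, and the problem only surfaces when the composite is run.

```python
def f(x):
    return 1 / (1 - x**2)


def g(x):
    return x**3


def h(x):
    return 1  # the constant function: ignores its input


def compose(outer, inner):
    """Plug `inner` into `outer`: returns x -> outer(inner(x))."""
    return lambda x: outer(inner(x))


f_after_g = compose(f, g)
print(f_after_g(2))  # 1 / (1 - (2**3)**2) = 1 / (1 - 64), about -0.0159

f_after_h = compose(f, h)  # builds without complaint...
try:
    f_after_h(5)           # ...but fails when run, whatever x is
except ZeroDivisionError as err:
    print("gibberish:", err)
```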

In calculus class, you might have been asked to “find the domain” of a function; this was just an exercise in figuring out when a composite could possibly make sense. You might have been asked to find the domain of a composite if you know the domain of each component. But this gets complicated fast, and you really have to know the specifics of how your functions work to figure this out. If you mess up, you get gibberish.

Category theory takes a different approach: we say that part of what it means to be a function is that it has a well defined domain and codomain. We could write f\colon\mathbb{R}-\{-1,1\}\to\mathbb{R} to say that the domain of f is the set of real numbers except 1 and -1, and that the codomain of f is all of \mathbb{R}. This gives us a guarantee on the use of f: whenever g\colon X\to\mathbb{R}-\{-1,1\} is any function whatsoever with codomain the domain of f, the composite f\circ g\colon X\to\mathbb{R} is well-defined, and it has the domain of g and the codomain of f. We do the work up front when we define our function f, and then get guarantees on all future behavior no matter what we compose in (or out).
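A minimal sketch of the category-theoretic fix, under the assumption that a set can be represented by a name plus a membership test (`Space`, `Fn`, `compose`, `REALS` and `PUNCTURED` are hypothetical names). Composition compares the declared codomain of the inner function with the declared domain of the outer one before anything is evaluated. The sketch trusts the declarations: checking that a rule really lands in its declared codomain is the up-front work described above, and the code does not do it for you.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Space:
    """A named set, represented by a membership test (illustrative only)."""
    name: str
    contains: Callable[[float], bool]


REALS = Space("R", lambda x: True)
PUNCTURED = Space("R - {-1, 1}", lambda x: x not in (-1, 1))


@dataclass(frozen=True)
class Fn:
    """A function that carries its declared domain and codomain with it."""
    dom: Space
    cod: Space
    rule: Callable[[float], float]

    def __call__(self, x: float) -> float:
        # Only accept inputs from the declared domain.
        if not self.dom.contains(x):
            raise ValueError(f"{x} is not in {self.dom.name}")
        return self.rule(x)


def compose(outer: Fn, inner: Fn) -> Fn:
    """outer ∘ inner, allowed only when inner's codomain is outer's domain."""
    if inner.cod != outer.dom:
        raise TypeError(f"codomain {inner.cod.name} != domain {outer.dom.name}")
    return Fn(inner.dom, outer.cod, lambda x: outer.rule(inner.rule(x)))


f = Fn(PUNCTURED, REALS, lambda x: 1 / (1 - x**2))

# x**3 equals 1 or -1 only when x does, so cubing restricts to a map from
# R - {-1, 1} to itself, and with that interface it composes with f.
g = Fn(PUNCTURED, PUNCTURED, lambda x: x**3)
f_after_g = compose(f, g)
print(f_after_g(2))  # about -0.0159

# Cubing on all of R declares the wrong codomain, so compose(f, g_on_reals)
# raises TypeError before any number is plugged in. The constant function
# h(x) = 1 cannot honestly be declared with codomain R - {-1, 1} at all.
g_on_reals = Fn(REALS, REALS, lambda x: x**3)
```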

This idea of requiring that functions have well-defined domains and codomains was a conceptual innovation of category theory, way back in the 1940s. It cleanly separated a function from its interface with other functions.

This idea of giving an interface to functions is very much the same idea that leads us to use data types while programming. By keeping track of the types of inputs to a method and the return type, we can catch errors at compile time that would have resulted in gibberish at runtime. This is why category theory has been so useful in the study of programming languages.

2 What do functions have to do with systems?

The “functions” in a category are abstract things — all that is necessary is that they have domains and codomains (which could themselves be any sorts of things), and that you can compose them (satisfying some axioms). This means that f\colon A\to B could, in principle, be something entirely un-function-like — so long as you could compose things like this.

We can even take f to be a system whose input interface is A and whose output interface is B! Then if we have another system g\colon B\to C whose input interface is B and whose output interface is C, we can compose them to get a system g\circ f\colon A\to C whose input interface is A and whose output interface is C.
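One concrete way to read “a system with input interface A and output interface B” is as a Mealy machine: a system with private internal state that, on each input, updates its state and emits an output. This is an assumption made for the sketch, not the only notion of system the post has in mind, and `System`, `compose` and `run` are hypothetical names. Interfaces are plain string labels, and the composite systems interact only through them: g never sees the state of f, only its outputs.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List, Tuple


@dataclass
class System:
    """A discrete-time system with an input interface and an output interface.

    `step` takes (state, input) to (new_state, output). The interfaces are
    just labels here; the internal state is hidden from other systems.
    """
    inp: str
    out: str
    state: Any
    step: Callable[[Any, Any], Tuple[Any, Any]]

    def run(self, inputs: Iterable[Any]) -> List[Any]:
        """Feed a stream of inputs through the system, collecting outputs."""
        s, outputs = self.state, []
        for x in inputs:
            s, y = self.step(s, x)
            outputs.append(y)
        return outputs


def compose(g: System, f: System) -> System:
    """g ∘ f: wire f's output interface into g's input interface."""
    if f.out != g.inp:
        raise TypeError(f"interface mismatch: {f.out} vs {g.inp}")

    def step(state, x):
        sf, sg = state         # the composite's state is a pair
        sf, b = f.step(sf, x)  # f reacts to the outside input
        sg, c = g.step(sg, b)  # g only ever sees f's output
        return (sf, sg), c

    return System(f.inp, g.out, (f.state, g.state), step)


# f: A -> B, a running total of the inputs it has seen.
f = System("A", "B", 0, lambda s, x: (s + x, s + x))
# g: B -> C, remembers its previous input and reports the change.
g = System("B", "C", 0, lambda s, x: (x, x - s))

gf = compose(g, f)
print(gf.run([1, 2, 3]))  # running totals 1, 3, 6 give changes [1, 2, 3]
```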

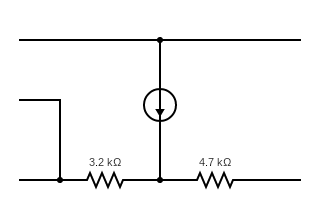

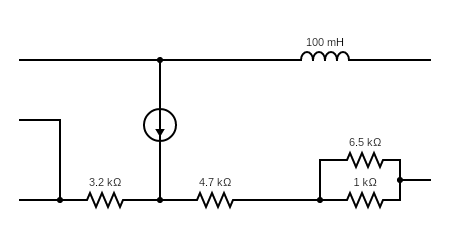

For example, f could be a circuit diagram with three dangling wires A on the left, and two dangling wires B on the right:

And g could be a circuit diagram with two dangling wires B on the left, and no dangling wires C on the right:

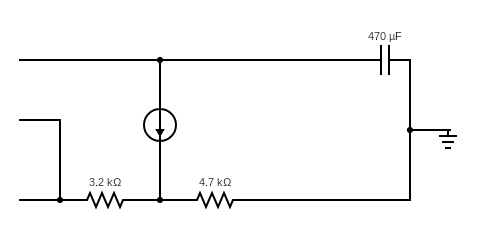

By plugging the output wires of f into the input wires of g, we get the composite system g\circ f:

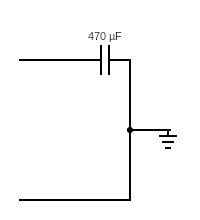
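Plugging wires together can be sketched as gluing graphs. The representation below is an assumption for illustration (the `Circuit` fields and the example components are made up and do not reproduce the pictures): a circuit is a graph of components, and each dangling wire records which node it is attached to. Composing g ∘ f identifies the k-th output node of f with the k-th input node of g, using a small union-find to merge nodes; this gluing step is what category theorists call a pushout, the usual way open graphs (cospans) are composed.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Circuit:
    """An open circuit: a graph with some nodes exposed as dangling wires.

    `inputs[k]` / `outputs[k]` is the node that the k-th dangling wire on
    the left / right is attached to. Edges are (node, node, component).
    """
    n_nodes: int
    edges: List[Tuple[int, int, str]]
    inputs: List[int]
    outputs: List[int]


def compose(g: Circuit, f: Circuit) -> Circuit:
    """g ∘ f: plug f's output wires into g's input wires, one by one."""
    if len(f.outputs) != len(g.inputs):
        raise TypeError("f and g disagree on the number of wires")

    # Put the nodes of f and g side by side (g's indices are shifted).
    shift = f.n_nodes
    parent = list(range(f.n_nodes + g.n_nodes))

    def find(v: int) -> int:
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    # Glue: the k-th output node of f becomes the k-th input node of g.
    for a, b in zip(f.outputs, g.inputs):
        parent[find(b + shift)] = find(a)

    # Renumber the surviving nodes 0, 1, 2, ... in order of first appearance.
    new_id = {}
    for v in range(len(parent)):
        new_id.setdefault(find(v), len(new_id))

    def rename(v: int) -> int:
        return new_id[find(v)]

    edges = [(rename(u), rename(v), c) for u, v, c in f.edges]
    edges += [(rename(u + shift), rename(v + shift), c) for u, v, c in g.edges]
    return Circuit(
        n_nodes=len(new_id),
        edges=edges,
        inputs=[rename(v) for v in f.inputs],
        outputs=[rename(v + shift) for v in g.outputs],
    )


# A made-up f: three input wires on nodes 0, 1, 2 and two output wires on 3, 2.
f = Circuit(4, [(0, 3, "R1"), (1, 3, "R2")], inputs=[0, 1, 2], outputs=[3, 2])
# A made-up g: two input wires joined by one resistor, no output wires.
g = Circuit(2, [(0, 1, "R3")], inputs=[0, 1], outputs=[])

gf = compose(g, f)
print(gf.n_nodes, gf.edges)  # 4 [(0, 3, 'R1'), (1, 3, 'R2'), (3, 2, 'R3')]
```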

But we didn’t have to plug f into g, we could have plugged it into h\colon B\to D:

Then we’d get h\circ f\colon A\to D:

Of course, I could have told this story with Markov processes instead of circuit diagrams. Or labelled transition systems. Or even systems of differential equations. Category theory is a precise and flexible mathematics for describing how to compose any sort of systems.
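To make the Markov case concrete under simple assumptions (finite state spaces, discrete time), a Markov kernel from A to B can be stored as a table of transition probabilities from each state in A to states in B. Composition is the Chapman–Kolmogorov rule: sum over the intermediate states in B. The dict encoding and the function names are hypothetical, and the example probabilities are made up.

```python
from fractions import Fraction
from typing import Dict

# kernel[a][b] = probability of moving from state a to state b.
Kernel = Dict[str, Dict[str, Fraction]]


def compose(g: Kernel, f: Kernel) -> Kernel:
    """g ∘ f: one step of f, then one step of g (Chapman–Kolmogorov)."""
    result: Kernel = {}
    for a, row in f.items():
        out: Dict[str, Fraction] = {}
        for b, p in row.items():
            if b not in g:  # every state f can reach must be a state g accepts
                raise TypeError(f"{b!r} is not in the domain of g")
            for c, q in g[b].items():
                out[c] = out.get(c, Fraction(0)) + p * q
        result[a] = out
    return result


def is_stochastic(k: Kernel) -> bool:
    """Every row of a Markov kernel is a probability distribution."""
    return all(sum(row.values()) == 1 for row in k.values())


f = {"x": {"u": Fraction(1, 2), "v": Fraction(1, 2)},
     "y": {"u": Fraction(1, 4), "v": Fraction(3, 4)}}
g = {"u": {"p": Fraction(1, 3), "q": Fraction(2, 3)},
     "v": {"p": Fraction(1)}}

gf = compose(g, f)
print(gf["x"])  # p with probability 2/3, q with probability 1/3
```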

3 Composing behaviors: Functors

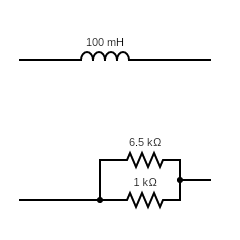

Circuit diagrams are one model of electrical circuits, one which foregrounds the way circuits are composed out of basic components. But to predict what a circuit will do, we need a model which foregrounds the quantities we might measure about a circuit. This situation is repeated across systems science: we often have presentations of systems which are easy to describe and read, and then models of those same systems which are more difficult to build and understand, but match the world more closely.

However, we can save ourselves some trouble. If we agree to measure our circuits only at their terminals, looking only at how our systems behave on their interfaces, then we need to track a lot less information. We can, following John Baez and Brendan Fong, consider the Lagrangian relation between the input and output terminals of our circuits (which encodes the total power dissipated by the circuit). Then there is a way to black box a circuit, replacing it with this relation between the potentials and currents at its terminals. It doesn’t matter what a Lagrangian relation is precisely, just that it describes the physics of the circuit.

What this means is that if we have a circuit f\colon A\to B with input terminals A and output terminals B in the category of circuits, then we get a Lagrangian relation Rf\colon\mathbb{R}^A\oplus(\mathbb{R}^A)^*\to\mathbb{R}^B\oplus(\mathbb{R}^B)^* which relates the potentials (in \mathbb{R}^A) and currents (in the dual (\mathbb{R}^A)^*) at the input terminals to the potentials and currents at the output terminals. So Rf lives in a different category, the category of Lagrangian relations, and its domain and codomain in that category are calculated from the domain and codomain of f, namely the terminals of the circuit f.

The crucial property of this black boxing operation is that it preserves composition. That is, if we plug the circuit f\colon A\to B into the circuit g\colon B\to C to get the circuit g\circ f\colon A\to C, then we can calculate the Lagrangian relation R(g\circ f) of this combined circuit as the composite Rg\circ Rf of the Lagrangian relations associated to each component.

An operation R like this which goes between categories is called a functor, and the formula R(g\circ f)=Rg\circ Rf gives us a way of expressing constraints on the behavior of combined systems (R(g\circ f)) in terms of their parts (Rg and Rf).
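Lagrangian relations do not fit in a short snippet, so the sketch below swaps in a much simpler, classical stand-in that obeys the same law. It restricts to ladder circuits (chains of resistors in series and resistors to ground between one input terminal and one output terminal) and black-boxes each one as a 2x2 transfer matrix taking the voltage and current at the input to those at the output. Plugging ladders end to end is list concatenation, and black-boxing turns it into matrix multiplication, so R(g∘f)=Rg∘Rf holds by construction. The encoding, the function names and the sign convention (current flows in at the input and out at the output) are assumptions of this sketch, not the construction of Baez and Fong.

```python
from fractions import Fraction
from typing import List, Tuple

Matrix = Tuple[Tuple[Fraction, Fraction], Tuple[Fraction, Fraction]]
Component = Tuple[str, Fraction]  # ("series", ohms) or ("shunt", ohms)
Ladder = List[Component]          # components listed from input end to output end

IDENTITY: Matrix = ((Fraction(1), Fraction(0)), (Fraction(0), Fraction(1)))


def matmul(m: Matrix, n: Matrix) -> Matrix:
    """The 2x2 matrix product m n."""
    return tuple(
        tuple(sum(m[i][k] * n[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def component_matrix(kind: str, r) -> Matrix:
    """Send (V, I) at a component's input terminal to (V, I) at its output."""
    r = Fraction(r)
    if kind == "series":  # same current passes through; voltage drops by r * I
        return ((Fraction(1), -r), (Fraction(0), Fraction(1)))
    if kind == "shunt":   # resistor to ground: voltage unchanged, V / r leaks away
        return ((Fraction(1), Fraction(0)), (-1 / r, Fraction(1)))
    raise ValueError(f"unknown component {kind!r}")


def black_box(circuit: Ladder) -> Matrix:
    """The functor R: a ladder circuit goes to its 2x2 transfer matrix."""
    m = IDENTITY
    for kind, r in circuit:
        m = matmul(component_matrix(kind, r), m)  # later parts act after earlier ones
    return m


def then(f: Ladder, g: Ladder) -> Ladder:
    """g ∘ f: attach f's output terminal to g's input terminal."""
    return f + g


def open_circuit_gain(m: Matrix) -> Fraction:
    """V_out / V_in when no current is drawn at the output (I_out = 0)."""
    (a, b), (c, d) = m
    return a - b * c / d


f = [("series", 100)]  # a 100-ohm resistor in series
g = [("shunt", 300)]   # a 300-ohm resistor to ground

# Functoriality: black-boxing the composite equals composing the black boxes.
assert black_box(then(f, g)) == matmul(black_box(g), black_box(f))
print(open_circuit_gain(black_box(then(f, g))))  # 3/4, the divider 300 / (100 + 300)
```

The check on the last line is a physical sanity test: a series resistor followed by a resistor to ground is a voltage divider, and the composite black box reproduces its familiar ratio without ever looking inside the combined circuit.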

4 What do categorical systems theorists do?

Hopefully I have given you a sense of how category theory can be useful in studying complex systems: we keep track of how the systems are interacting with other systems through their interfaces, build up more complicated systems by composing them along their interfaces, then study their behaviors in terms of the behaviors of their (simpler) components.

But what do categorical systems theorists actually do? There are two main thrusts of research in categorical systems theory which I might broadly characterize as “breadth focused” and “depth focused”.

The breadth focused research is about bringing more systems theories into the categorical fold. The generality of category theory means it can apply to any systems theory in which systems can interact through well defined interfaces. But though the idea of cleanly separating interfaces from internal behavior is well understood in the computing sciences, it is not widespread throughout science. So effort has to go into understanding what sorts of interface are appropriate for a particular sort of model of systems, that is, what sort of category is appropriate for that theory of systems. Then mathematical work has to go into finding the functors which package up the behaviors and give guarantees on the behavior of composites in terms of guarantees on their components.

The depth focused research is about extending the main ideas of category theory to more faithfully model what an interface to a system can be and how interfaces interact. This means going beyond basic categories to other related mathematical structures from category theory: symmetric monoidal categories, operads, double categories. Sometimes, new categorical structures have to be invented just for this purpose. The upside of this research is that the kinds of guarantees offered by the appropriate analogues of functors can more finely respect the ways systems can be put together.

Finally, and perhaps most importantly, a number of mathematicians and programmers are implementing categorical systems theory in software, so that these methods can be used to design and study complex systems built out of existing numerical and computer models. This is the work being done by the AlgebraicJulia group, supported in part by the Topos Institute.