Who is category theory for?

YouTube is our most powerful outreach tool. Yet how does it shape who we reach out to? And is this in line with the values of our community?

In late September, we published what I think is a wonderful introduction to category theory by educational designer Paul Dancstep. Viewers agree:

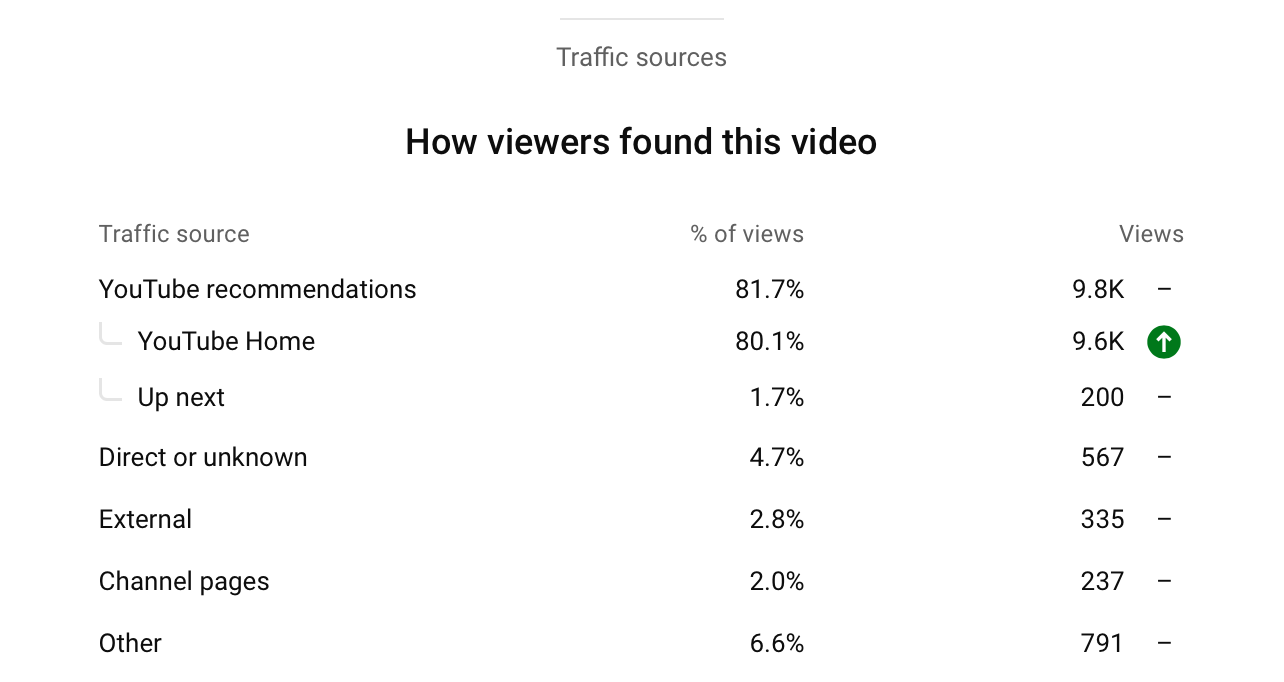

And YouTube does too, with an unusually high proportion of our views coming via YouTube recommendations:

This is wonderful: we love that so many people are learning about category theory! Category theory is a beautiful part of mathematics, and we believe it will be an important ingredient in deciphering the complexity of the systems around us, and shaping them for the better.

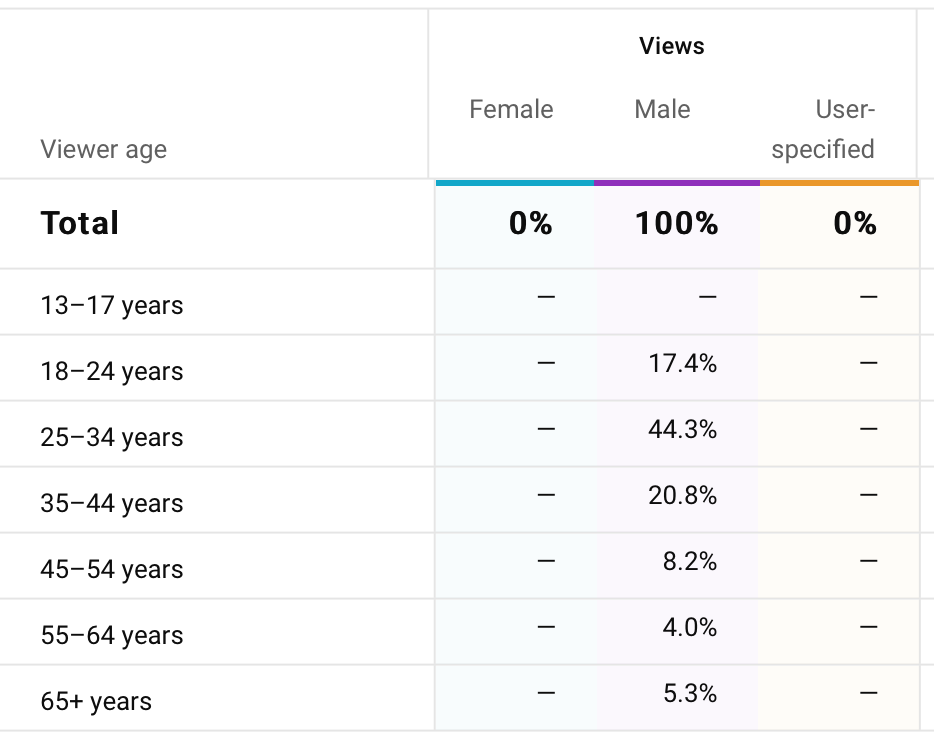

And yet, there’s a frustrating turn. Just who is learning about category theory? Let’s take a closer look:

The numbers are stark: 0% female, 100% male.

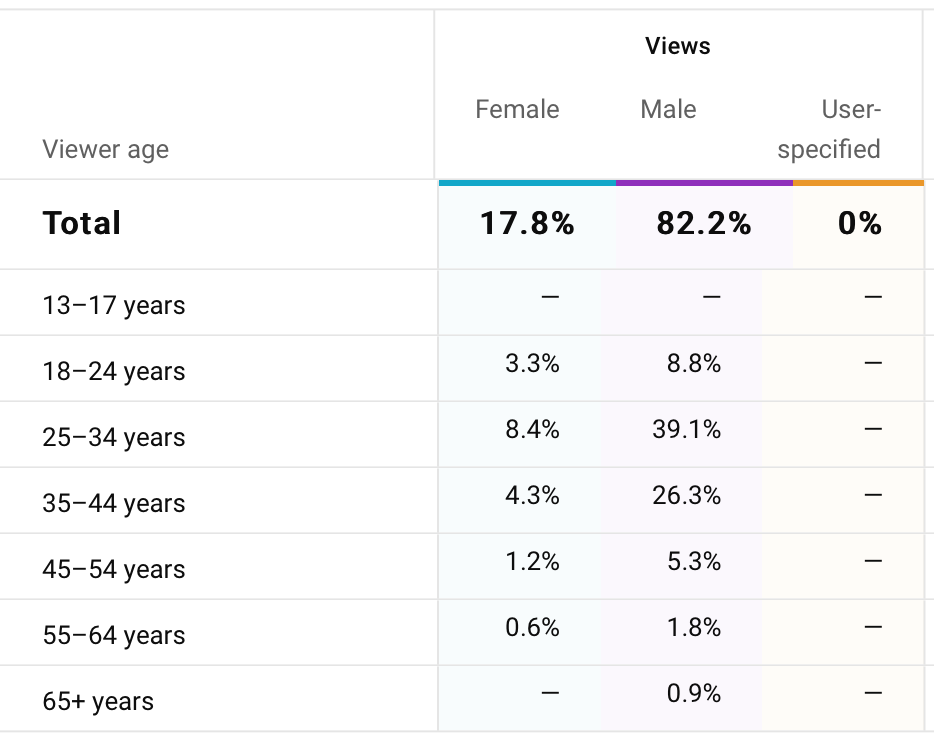

To better understand what’s happening, we must also look back at our typical viewership. Despite our firm belief that category theory and mathematics are for all, our YouTube viewership in general skews strongly male. Nonetheless, in August, the month before this video was published, YouTube identified around one in five of our viewers as female.

Through initiatives such as our Em-Cats seminar and our support of the Adjoint School and Women in Logic, we’re working hard to make our community more inclusive, diverse, and equitable, and in doing so make the gender balance in all parts of the Topos community more representative of the wider world. However, what we’re observing here, an established pattern across all our videos, puts us in a bind.

While these statistics must be taken with a grain of salt (the gender information is largely inferred by YouTube, and may not be accurate), the implication remains: when YouTube promotes our videos, it promotes them almost exclusively to viewers that it identifies as male.

More pithily: YouTube thinks category theory is for men.

YouTube is our most powerful outreach platform. It’s revolutionary that videos about category theory, from introductions to the field to the cutting edge of research, are now available to anyone with an internet connection, wherever they may be. Tens of thousands of people watch our videos each month. And in absolute numbers, we’re confident that YouTube makes our work readily available to more women than would be possible without the platform.

But YouTube’s algorithmic bias means that using YouTube pits our efforts to create a growing category theory community directly against our efforts to create an inclusive category theory community.

Why do we care about this? Mathematics and science are our common heritage, and everyone deserves to hear about and understand them. More than this, our society is increasingly built upon technologies that are enabled by mathematics, and this trend is only intensifying. For technologies to serve all people, the ability to understand them, shape them, and reshape them must be in all of our hands. So it is essential to the Topos mission that we give everyone a voice in the future of technology. Among many other things, this starts with who sees ‘What is Category Theory?’ on their YouTube feed.

More broadly, we see in this dilemma the urgency of our work. As computer scientists, we understand that these recommendation engines are based on deep learning and modern statistical artificial intelligence techniques, and that, precisely because of their incredible ability to detect patterns and to optimise, such biases are difficult to combat. We must work towards more accountable structures for artificial intelligence, and a critical step towards accountability will be machine learning methods that are more transparent. New mathematics, such as the work of our connected intelligence team, is essential for this.

At the same time, this problem cannot be solved with more mathematics alone. We must examine the ways we teach, raise awareness of how technologies can conflict with our values, and push for change. Technology can disadvantage certain communities in hidden ways. While this example is only one small instance of the many ways our algorithms can be biased, the first step to change will be to bring these hidden aspects to light. So here we are.

Anyway, if you haven’t already, please do check out Paul’s video! And please share it with someone who might not have the opportunity to see it otherwise.